Backing up data from App Engine with appcfg.py

Even if you trust App Engine to keep your app data safe, you might still want to download a local copy. Why? Because a local copy lets you test your app against real data in a separate local development version.

Even if no one uses your app yet, it's better not to push every change to the live version. Deploying each time is just slow.

If you have a local copy of your data, you can run your app in the local development server and see changes immediately, without deploying the app every time.

How do I get a local copy of my data?

After you've installed the App Engine SDK locally, you have a tool called appcfg.py.

For instance, on my computer the tool was installed at /usr/local/bin/appcfg.py.

It sits conveniently in my path already, so I can just run it as "appcfg.py" from my command line without giving the full path.

The tool has several "actions" (sub-commands): besides taking backups, it is also used for things like deploying the app. The relevant action here is called download_data.

How do I download my data with appcfg.py?

The action is given as the first argument to appcfg.py. Here it is followed by the app directory (. for the current directory) and the name of the file to write the backup to:

appcfg.py download_data . --filename=data_store_backup

For this to work, the remote_api builtin needs to be enabled in your app.yaml. Add this snippet and redeploy the app:

builtins:
- remote_api: on

Running the command should now start the backup, unless you run into a problem with your login credentials.

Getting credentials for appcfg.py

If you get this error:

oauth2client.client.ApplicationDefaultCredentialsError: The Application Default Credentials are not available. They are available if running in Google Compute Engine. Otherwise, the environment variable GOOGLE_APPLICATION_CREDENTIALS must be defined pointing to a file defining the credentials. See https://developers.google.com/accounts/docs/application-default-credentials for more information.

then you need to get a credentials file and make it visible to appcfg.py via an environment variable. However, I'd suggest not following the instructions in the error message; at least for me, they just led to another error:

2017-06-15 14:23:59,331 INFO client.py:546 Attempting refresh to obtain initial access_token
2017-06-15 14:23:59,372 INFO client.py:804 Refreshing access_token
2017-06-15 14:24:01,114 INFO client.py:578 Refreshing due to a 401 (attempt 1/2)
2017-06-15 14:24:01,154 INFO client.py:804 Refreshing access_token
2017-06-15 14:24:01,614 INFO client.py:578 Refreshing due to a 401 (attempt 2/2)
2017-06-15 14:24:01,655 INFO client.py:804 Refreshing access_token
2017-06-15 14:24:02,169 INFO client.py:578 Refreshing due to a 401 (attempt 1/2)
2017-06-15 14:24:02,210 INFO client.py:804 Refreshing access_token
...

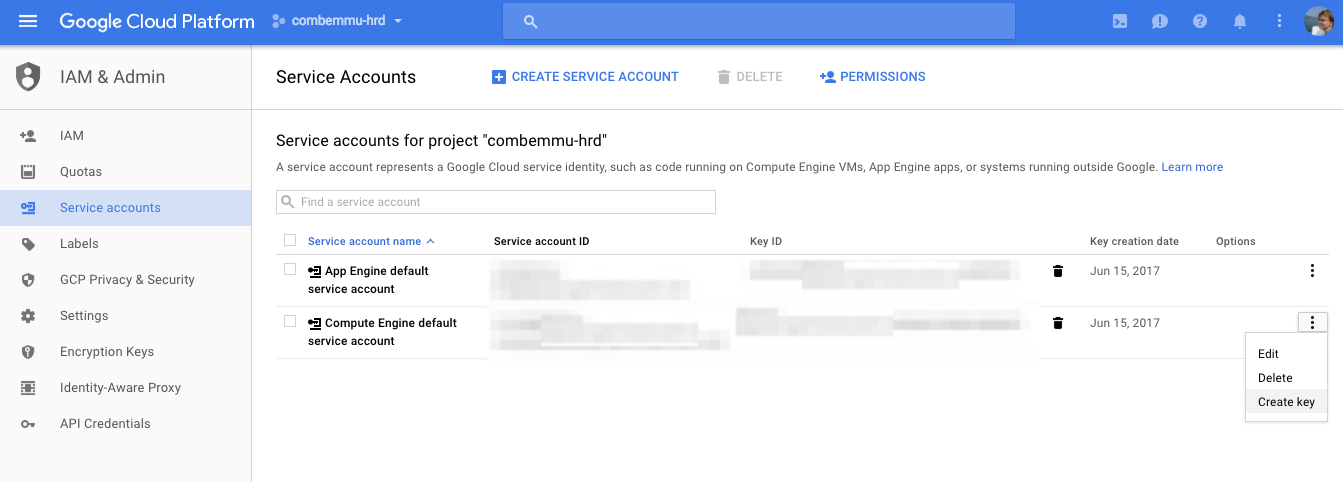

Instead, go to the IAM service accounts page. Next to "Compute Engine default service account", select "Create key".

Select JSON.

You get a file containing your credentials. You could set the environment variable in your .bashrc, but to just try things out I saved the key file with the project and created a "backup" script that uses it like this:

GOOGLE_APPLICATION_CREDENTIALS=compute-key.json appcfg.py download_data . --filename=data_store_backup

That sets the environment variable before calling appcfg.py. Run this script and the backup should start.
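The same idea can be sketched in Python, in case you'd rather keep the backup step next to other Python tooling. This is only a rough equivalent of the one-line script above: the function names are made up for illustration, and it assumes appcfg.py is executable and on your path and that the key file is called compute-key.json as in my setup.

```python
import os
import subprocess


def build_backup_command(filename="data_store_backup", app_dir="."):
    """Return the appcfg.py download_data command as an argument list."""
    return ["appcfg.py", "download_data", app_dir, "--filename=" + filename]


def build_backup_env(key_path, base_env=None):
    """Copy the environment and point GOOGLE_APPLICATION_CREDENTIALS at the key file."""
    env = dict(os.environ if base_env is None else base_env)
    # An absolute path keeps working even if the working directory changes.
    env["GOOGLE_APPLICATION_CREDENTIALS"] = os.path.abspath(key_path)
    return env


def run_backup(key_path="compute-key.json"):
    """Run the download and return appcfg.py's exit code (assumes appcfg.py is on the path)."""
    return subprocess.call(build_backup_command(), env=build_backup_env(key_path))
```

Calling run_backup() from the project directory should then behave like the shell version: the variable is set only for the appcfg.py child process, not for your whole shell session.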

Hitting a 404?

If you get an error like the following:

...
  File "/Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/urllib2.py", line 409, in _call_chain
    result = func(*args)
  File "/Library/Frameworks/Python.framework/Versions/2.7/lib/python2.7/urllib2.py", line 558, in http_error_default
    raise HTTPError(req.get_full_url(), code, msg, hdrs, fp)
HTTPError: HTTP Error 404: Not Found
[ERROR ] Authentication Failed: Incorrect credentials or unsupported authentication type (e.g. OpenId).

It means your appcfg.py is too old.

I found this out the hard way by stepping through the files mentioned in the error message and discovering that my appcfg.py was calling the old, deprecated ClientLogin API.

Installing the latest Cloud SDK version (159.0.0 as I write this) made the problem go away.

Uploading data to localhost

If you have dev_appserver.py running on port 7219 and your backup data in a file called data_store_backup, you can upload the data to localhost as follows:

appcfg.py upload_data \
  --url=http://localhost:7219/_ah/remote_api \
  --filename=data_store_backup \
  --batch_size=256

In practice, if you try this, you may hit an error like this:

client.py:578 Refreshing due to a 401 (attempt 1/2)

client.py:804 Refreshing access_token

The consensus online seems to be that the most practical way around this is to hack your local dev server to bypass the admin check. To do this, you first need to find the following file:

google-cloud-sdk/google-cloud-sdk/platform/google_appengine/google/appengine/ext/remote_api/handler.py

If you can't figure out where that file is on your file system, you can print the location of the remote_api package from a local App Engine app; handler.py sits in the same directory:

import google.appengine.ext.remote_api

self.response.write(google.appengine.ext.remote_api.__file__)
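If you'd rather get the full path to handler.py in one go, a small helper along these lines might do it. This is a sketch, not something from the SDK: path_in_package is a name I made up, and the demo uses the standard-library json package only so the snippet runs outside App Engine.

```python
import importlib
import os


def path_in_package(package_name, filename):
    """Return the path of `filename` inside the directory of an importable package."""
    package = importlib.import_module(package_name)
    package_dir = os.path.dirname(os.path.abspath(package.__file__))
    return os.path.join(package_dir, filename)


# Demonstrated with a standard-library package so it runs anywhere.
print(path_in_package("json", "decoder.py"))
```

Inside a request handler of your local app you would instead write out path_in_package("google.appengine.ext.remote_api", "handler.py"), assuming handler.py lives in that package directory as in the path shown earlier.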

Once you have the file open, find the function CheckIsAdmin and make it always return True. Now the upload should work.